Bringing back IRIS By Lowe's - Arcus Smart Home

Lowe's first entered the smart-home business in 2012 with a system based on AlertMe. In 2015, this was replaced with "IRIS v2", a rewrite of the platform in Java that is now known as the Arcus Platform. Both platforms supported Zigbee and Z-Wave devices, with AlertMe, Centralite, and GreatStar working as OEMs to produce devices compatible with the platform. IRIS also supported custom integrations for products from Honeywell, Lutron, and others.

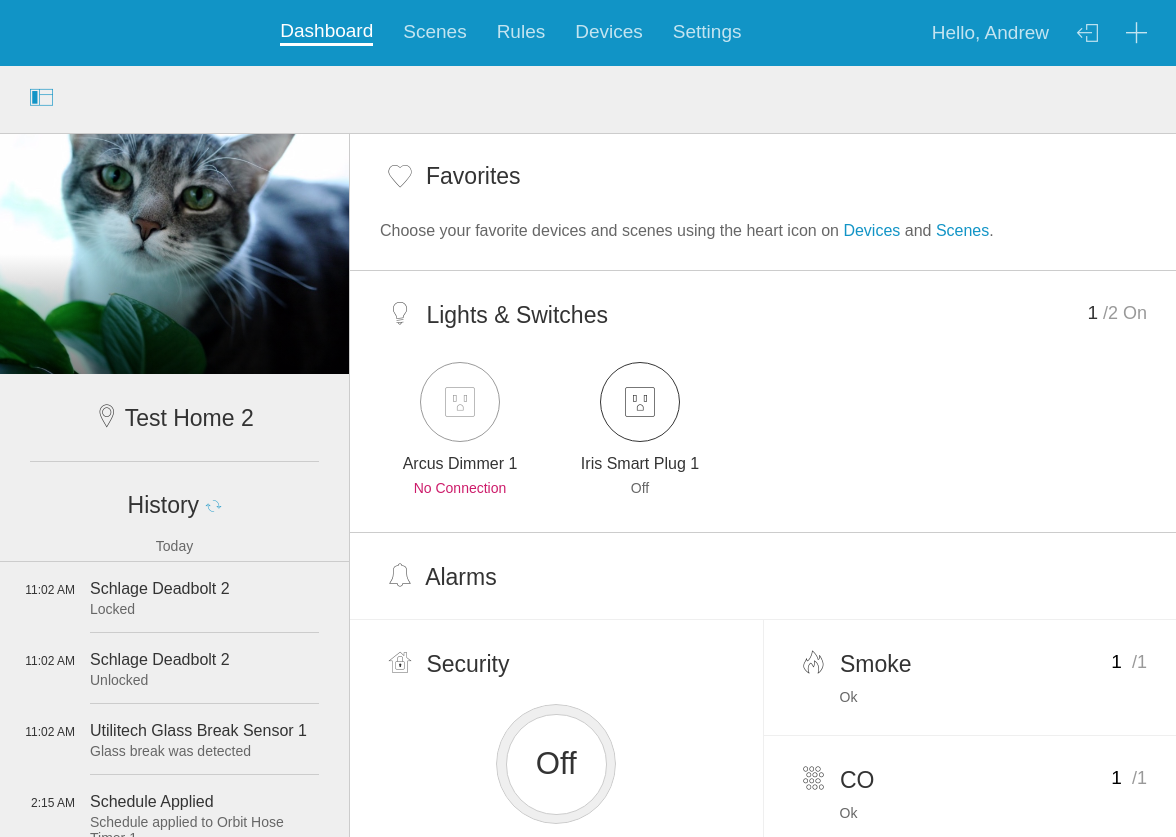

What distinguished IRIS from its competitors was its combination of home automation and home security, with a user-friendly interface and a simple task-driven rules engine. The service later added central monitoring, much like Ring offers today. As part of a shift in direction for Lowe's under Marvin Ellison, IRIS was put up for sale in 2018, and a plan to shut it down was formed in early 2019. In January 2019, Lowe's announced that it would exit the smart-home business and shut down IRIS on March 31st, 2019.

The last few years have been a rocky road for smart home products, with Best Buy shuttering its Insignia line of smart home devices, Google closing the "Works with Nest" program, Stringify shutting down, and many others. While most of these shutdowns leave customers stranded, Lowe's made some effort to right the situation by offering refunds to customers through a redemption process. However, Lowe's strategy did not end there. Along with the shutdown announcement, Lowe's announced that it would open-source IRIS, and in the following months the source code was made available on GitHub. By April 1st, 2019, the IRIS platform had been shut down, leaving customers with a non-functional system. Many users migrated to offerings from SystronicsRF, Hubitat, and Samsung SmartThings. Some users on the Living With Iris Forums discussed what they would do if IRIS came back, but little came of it.

Setting up Arcus Smart Home

Shortly after IRIS was shut down in April 2019, I started looking at the code on GitHub. When open-sourcing IRIS, Lowe's included most of the code but omitted any documentation. Fortunately, a few days later a few architecture diagrams were committed, which served as a starting point for understanding the Arcus architecture. IRIS was originally hosted on Apache Marathon, but the Marathon configurations were very specific to the deployment and were not included in the open-source release. Command-line tooling for setup on developer machines was included, but its configuration options and setup process were not documented. As a result, it took a few days of reading through the code and troubleshooting to reach a system that could be considered "working".

By April 7th, I had a working Arcus deployment and was able to start testing. Initially, I focused on basics like the IRIS Smart Plug, IRIS Contact Sensors, and IRIS Keypads, to further validate the system and evaluate what was working. Many portions of the code were unmodified from the original IRIS offering and were roadblocks to usage. For example, IRIS prompted users to determine whether they were eligible for "Pro Monitoring" before they were able to set up an account.

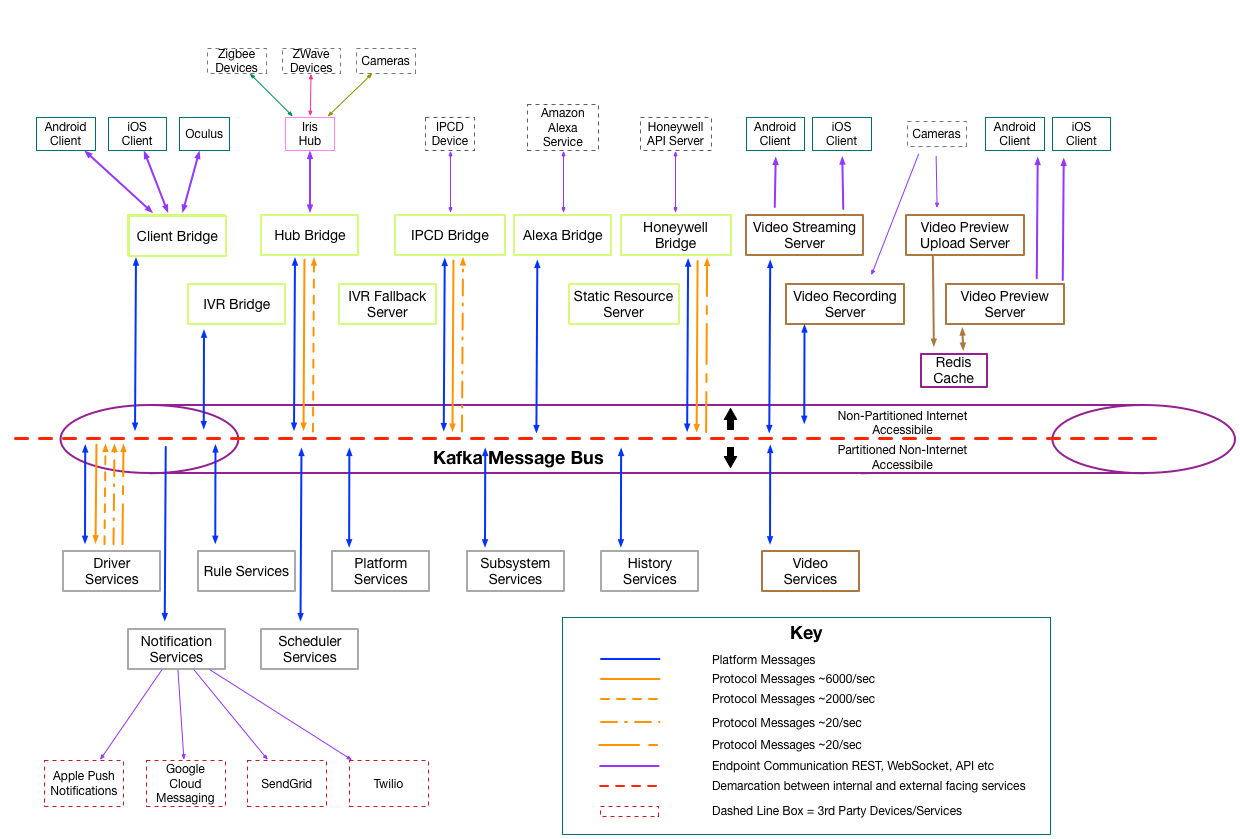

The Arcus Architecture

Arcus uses a technology stack that is common today. Persistent storage is handled by Apache Cassandra, inter-service communication by Kafka, and cluster management by Apache Zookeeper. A deployment of Arcus Platform is split into 128 partitions, with each user assigned a partition based on their Hub, allowing the system to handle a large number of concurrent users. The Hub acts as a gateway between the user's radio-based devices and the IRIS platform, with limited offline processing. Within Arcus Platform, various "bridge" services are responsible for carrying requests between internet-facing connections and the platform, while other services handle those requests and pass messages over the internal message buses. Each Arcus Platform service runs as a separate Java process inside its own container. These services can be deployed individually and assigned partitions through cluster management. Users are also assigned to "populations", such as "general", "beta", and "qa", to support testing, along with tiers of service that reflect Lowe's original pricing structure and "Premium" offering.
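To make the partitioning idea concrete, here is a minimal sketch of mapping a Hub ID onto one of 128 partitions and working out which hubs a service instance would own. The hashing scheme (CRC32 modulo the partition count) and both function names are assumptions for illustration only; how Arcus actually derives a partition is not described here.

```python
import zlib

PARTITION_COUNT = 128  # a deployment is split into 128 partitions


def partition_for_hub(hub_id, partitions=PARTITION_COUNT):
    """Map a hub ID to a stable partition number (hypothetical scheme).

    CRC32 is used only because it is deterministic across processes;
    the real platform may derive partitions differently.
    """
    return zlib.crc32(hub_id.encode("utf-8")) % partitions


def hubs_owned_by(assigned_partitions, hub_ids):
    """Return the hubs a service instance would handle for its partitions."""
    return [h for h in hub_ids if partition_for_hub(h) in assigned_partitions]
```

Because the mapping is deterministic, any instance can compute which partition a hub belongs to without coordination; only the partition-to-instance assignment needs to go through cluster management.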

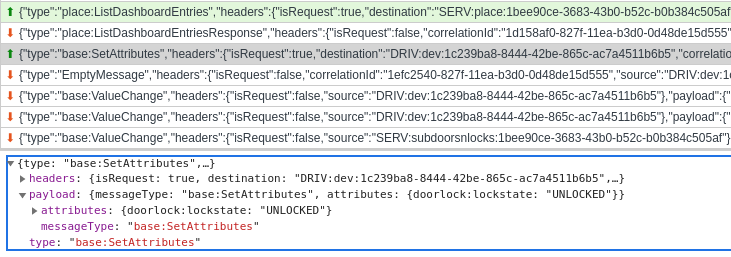

Users communicate with the system through WebSockets, sending well-defined messages according to the capability model. For example, when a user wants to turn on a light switch, they would send a SetAttributes command with arguments 'swit:state=ON' to the respective device. Here's an example of traffic generated when a lock's status is changed:
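As a rough sketch of the shape such a request could take (this is not captured traffic), the snippet below builds a SetAttributes message for the switch example above. The envelope field names (`type`, `headers`, `payload`), the `base:` prefix, and the device address are assumptions for illustration, not the documented wire format.

```python
import json
import uuid


def build_set_attributes(destination, attributes):
    """Serialize a SetAttributes request as JSON (hypothetical envelope)."""
    message = {
        "type": "base:SetAttributes",            # assumed message type name
        "headers": {
            "destination": destination,          # assumed device address format
            "correlationId": str(uuid.uuid4()),  # lets the client match the response
            "isRequest": True,
        },
        "payload": {"attributes": attributes},
    }
    return json.dumps(message)


# Turn on a switch; the address below is a placeholder, not a real device.
frame = build_set_attributes("DRIV:dev:example-device-id", {"swit:state": "ON"})
```

The resulting string is what a client would write to the open WebSocket; the correlation ID is included so a response can be paired with the request that caused it.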

Drivers & Reflexes

Arcus provides a Groovy-based driver model, similar to those available in Hubitat and SmartThings. These Groovy drivers are loaded and processed by the drivers-service, which receives inbound protocol messages from the Hub via the "protocol" Kafka topic.

Sample driver code that is triggered on a Z-Wave "basic" report:

```groovy
// ZEN26 reports local changes via basic report
onZWaveMessage.basic.report {
    byte currState = message.command.get('value')
    log.trace "Basic Report: {}", currState
    GenericZWaveSwitch.handleSwitchState(this, DEVICE_NAME, currState)
}
```

In addition to the Groovy event-based drivers, the driver model can also specify reflexes, which allow processing to occur on the hub without the need to load hundreds of Groovy drivers. Reflexes are loaded as part of driver-service initialization and serialized to JSON. When a reflex is triggered on the Arcus Hub, it can report information such as a motion or glass break sensor being tripped, which is in turn handed off to the AlarmController for local processing of alarms. If the hub is offline when a reflex is executed, the latest state will be delivered to the Arcus Platform once a connection is re-established.

```groovy
match reflex {
    on iaszone, endpoint: 2, profile: 0xC216, set: ["alarm1"]
    on amlifesign, endpoint: 2, profile: 0xC216, set: ["sensor"]
    set Contact.contact, Contact.CONTACT_OPENED
}
```

Thanks to this driver model, it is fairly easy to port drivers over from other platforms. As a result, Arcus now supports the Zooz ZEN26 & ZEN27 smart switches, the Ring Range Extender, the Ecolink Firefighter Smoke/CO Listener, and other new devices that were not supported in IRIS.

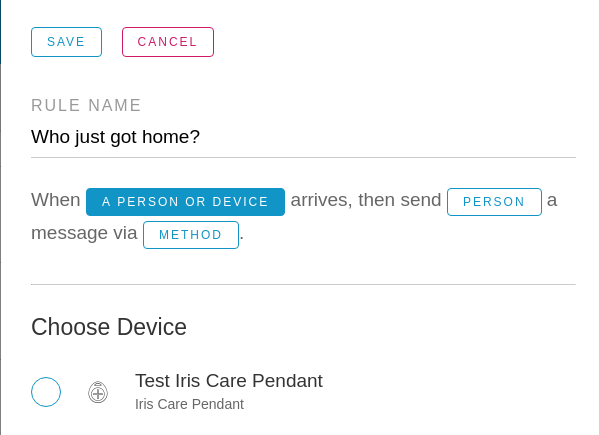

Rules & Device Query Language

Arcus provides a predetermined set of templated rules that can be used to build automations. Filling out these rules is simple, because Arcus automatically filters the device choices down to those each rule supports. Internally, these rules are stored in an XML file describing satisfaction and trigger requirements, along with the actions to take when the rule is triggered. The core of this rules engine is the Arcus Device Query Language, which uses simple expressions to select the devices that should be monitored or acted on.

```xml
<device-query var="address"
              query="doorlock:lockstate is supported AND doorlock:lockstate != 'LOCKED'">
    <set-attribute to="${address}" name="doorlock:lockstate" value="LOCKED" />
</device-query>
```
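To illustrate what the query above selects, here is a minimal sketch that applies the same two conditions to an in-memory device list and builds one set-attribute action per match. It hand-codes the predicate rather than parsing the query language, and the device records and function names are hypothetical.

```python
def is_supported(device, attribute):
    """Treat an attribute as 'supported' if the device reports it at all."""
    return attribute in device["attributes"]


def unlocked_locks(devices):
    """Addresses matching: doorlock:lockstate is supported AND != 'LOCKED'."""
    return [
        d["address"]
        for d in devices
        if is_supported(d, "doorlock:lockstate")
        and d["attributes"]["doorlock:lockstate"] != "LOCKED"
    ]


def lock_all(devices):
    """Build one set-attribute action per matching address, like the rule body."""
    return [
        {"to": address, "name": "doorlock:lockstate", "value": "LOCKED"}
        for address in unlocked_locks(devices)
    ]


devices = [
    {"address": "dev-1", "attributes": {"doorlock:lockstate": "UNLOCKED"}},
    {"address": "dev-2", "attributes": {"doorlock:lockstate": "LOCKED"}},
    {"address": "dev-3", "attributes": {"swit:state": "ON"}},  # not a lock
]
actions = lock_all(devices)
```

Only `dev-1` produces an action: `dev-2` is already locked, and `dev-3` fails the "is supported" check, which is how the query keeps non-lock devices out of the rule.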

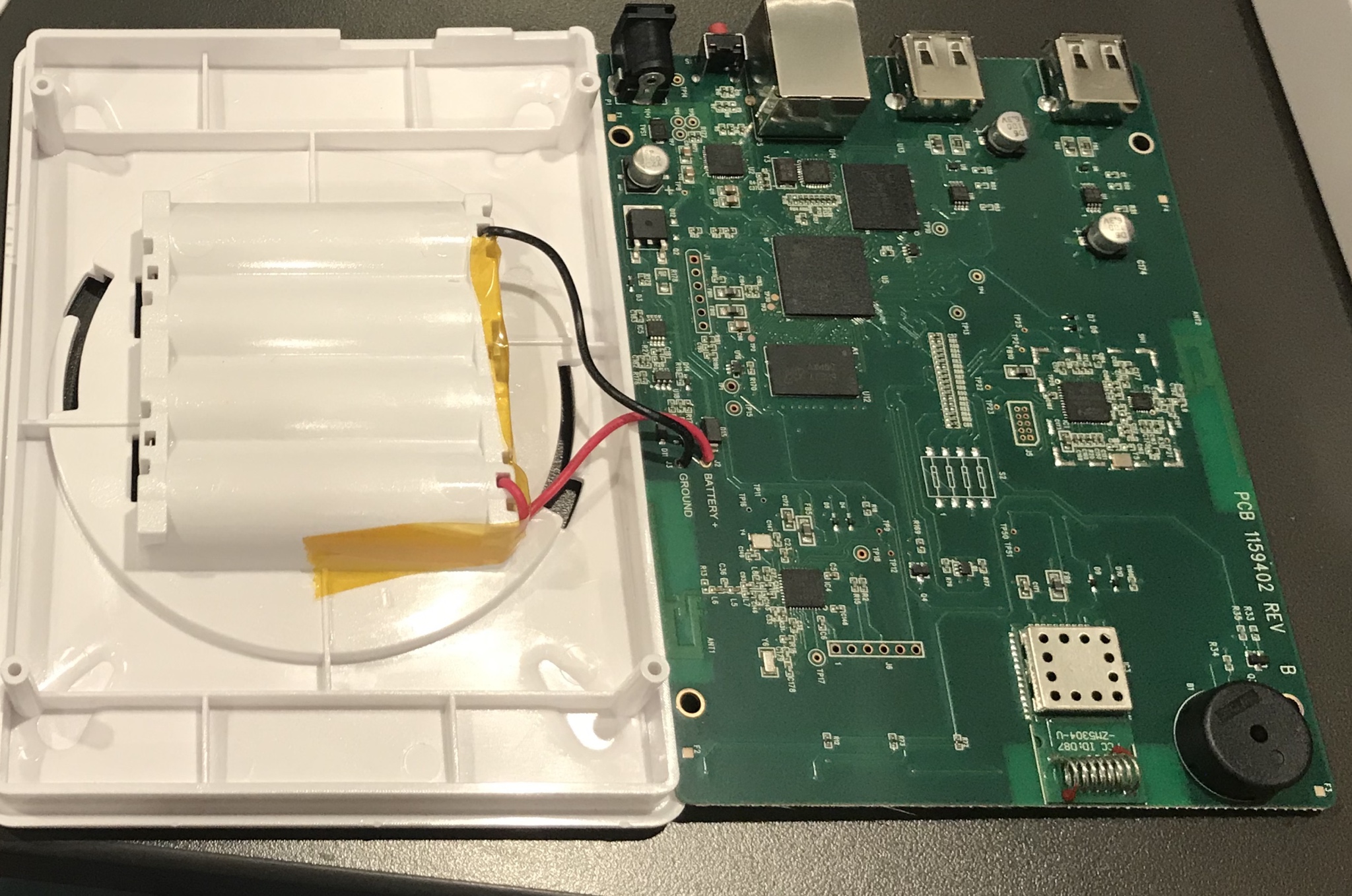

The Arcus Hub

Arcus is a "cloud" based platform, meaning that it depends on the internet and on services running on more capable hardware to complete basic tasks. To connect Zigbee and Z-Wave devices that cannot communicate directly over the internet, a Hub with the appropriate Z-Wave and Zigbee radios is used. The IRIS team developed two hubs for the v2 platform (the v1 hub is no longer useful), both of which are usable today. The IRIS hub launched with IRIS v2 is based on the BeagleBone Black, with a custom board layout that includes Z-Wave and Zigbee radios, backup power by way of 4 AA batteries, and a few status LEDs. The second generation of hubs on the v2 platform, the v3 hub, is more custom and is built on an NXP i.MX 6DualLite. Both versions of the IRIS hub can be found cheap, with the original v2 hub going for the cost of shipping and the v3 hub going for about $20 in an "IRIS (v2) Safe and Secure Kit". The IRIS team used Yocto Linux to create a custom "HubOS" distribution that could be provided to users of the IRIS Hub.

Upon bootup, the Arcus Hub runs a Java application called the Arcus "agent". This software is responsible for communicating with the radios over serial connections, and with the platform over TLS and WebSockets. If the platform is offline or otherwise unreachable, the hub can do limited offline processing to ensure that alarm functionality still works. Due to limitations of the hub hardware, only certain devices are supported for local processing via "Reflexes".

The Arcus Hub checks in with the platform when it connects to determine whether any updates are needed. The platform can instruct the hub to update the entire system (e.g. HubOS) or only the Agent application, depending on what is needed. When the hub is upgraded, only one partition on the hub is upgraded at a time, allowing the hub to recover from failed updates. If troubleshooting is required, the Hub can provide an SSH server for the user to log in to. To ensure that other users cannot access HubOS, the SSH server is only available when a USB drive with the appropriate hub key is present.

The Arcus Hub is shipped with a pre-generated X.509v3 key and certificate, which authenticate it to the platform. The MAC address of the Ethernet interface is converted into a Hub ID (e.g. LWF-3156), which is what the user enters into the system to "claim" the hub as part of the setup process. The Arcus Platform trusts a CA from Lowe's, which ensures that only Hubs with the appropriate certificates are allowed to authenticate.

These hubs can easily be repurposed to work with Arcus and are likely to be able to run Home Assistant or other open-source home automation software for far cheaper than a Raspberry Pi based solution.

The path to production

The path to production was a difficult one, with many outages along the way. Problems with Kafka topic retention, the availability of a single instance of Zookeeper, Docker container management with shell scripts, and poor Docker network connectivity caused many outages. The original configurations in Apache Marathon were not released as part of the open-sourcing of Arcus Platform, so it took a substantial amount of effort to understand the system and re-create this configuration. It took over a month to learn enough about Kubernetes, and a handful of other projects in its ecosystem, to bring up Arcus on Kubernetes. Unfortunately, the immaturity of the Kubernetes ecosystem (especially Istio) contributed to a large number of technical issues, such as problems with Cassandra replication.

Early on I decided to work with microk8s, as it was fairly easy to get started with, but I later switched to k3s from Rancher, as it stayed more up to date and generally worked better with projects like Istio and MetalLB. Kustomize was selected to handle templating of the Kubernetes configuration, along with a custom shell script that provides a prompt-based setup experience and walks users through a single-node k8s deployment. In retrospect, I probably would have settled for docker-compose for this project instead of using Kubernetes at all, but it was a valuable learning experience.

One particular challenge with productionizing Arcus was the use of a Kafka topic for metrics collection. Each service produces metrics to the "metrics" Kafka topic every 15 seconds, and this payload (uncompressed JSON) consumed a large amount of disk space. With no consumers of this data, and without a strong understanding of Kafka's topic retention, I was holding on to gigabytes of data that I did not need, which quickly exhausted my Kafka volume space in Kubernetes. Because of the way topic configuration is persisted, and because my Zookeeper k8s deployment was unknowingly throwing away my Kafka configuration, it took considerable time to understand this issue and to put meaningful restrictions in place so that Kafka disk-space exhaustion would stop causing outages.
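A quick back-of-the-envelope estimate shows how a 15-second reporting interval adds up, and how much a time-based retention limit (Kafka's topic-level `retention.ms` setting) caps it. The service count and payload size below are hypothetical placeholders, not measurements from this deployment.

```python
SECONDS_PER_DAY = 24 * 60 * 60
REPORT_INTERVAL_S = 15  # each service reports metrics every 15 seconds


def daily_metrics_bytes(services, payload_bytes):
    """Bytes written to the metrics topic per day, before replication."""
    reports_per_service = SECONDS_PER_DAY // REPORT_INTERVAL_S  # 5760 per day
    return services * reports_per_service * payload_bytes


def retained_bytes(daily_bytes, retention_ms):
    """Approximate steady-state topic size under a time-based retention limit."""
    return daily_bytes * retention_ms // (SECONDS_PER_DAY * 1000)


# Hypothetical deployment: 20 services, 50 kB of JSON per report.
per_day = daily_metrics_bytes(services=20, payload_bytes=50_000)
one_hour = retained_bytes(per_day, retention_ms=60 * 60 * 1000)
```

With these assumed numbers the topic grows by about 5.76 GB per day if nothing is ever deleted, while a one-hour retention window holds it to roughly 240 MB. Kafka removes data a whole log segment at a time, so actual usage will sit somewhat above the estimate.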

Even when deployed reliably as a single instance, Arcus was still very susceptible to issues with Kubernetes, like stuck pods, a broken cert-manager, etc. This led to a lot of troubleshooting of k8s and tracking of many issues in Istio, cert-manager, MetalLB, etc. that eventually got resolved as time went on.

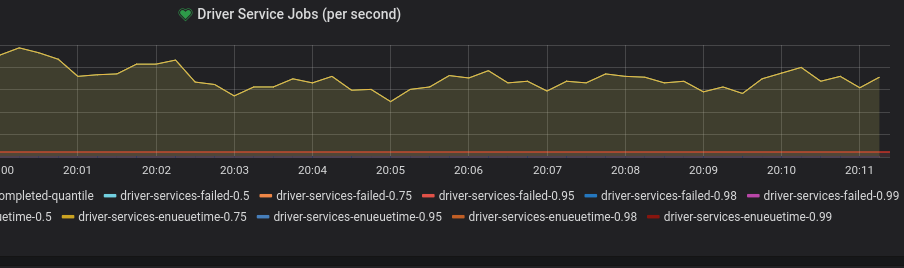

Monitoring

Arcus was open-sourced without any of the existing Grafana dashboards or monitoring guides. As a result, it was a "reverse-engineering" effort of sorts to look through the metrics and understand how they could be used. As previously mentioned, metrics were logged to the "metrics" topic in Kafka and then consumed by a service that stored them in KairosDB. As part of setting up monitoring in Arcus, I converted this system from a centralized metrics topic to Prometheus, which collects metrics from each service directly. This removed the dependency on KairosDB, which has seen less support from Grafana in recent years.

Arcus Multi-DC

Running a single instance of a complex production service on a single host leaves great exposure to risk. Hardware failures, network issues, and operator error can easily cause downtime, putting many functions of the system offline. For this reason, many systems that prioritize high availability support running multiple instances to some degree. My initial goal was to deploy Arcus to two preemptible GKE environments, but the complexity of such a system became overwhelming, and I ended up settling for an on-prem deployment to keep costs lower. Arcus uses a mix of active-active and active-passive models: clients can connect to any instance of a client or hub bridge, while specific instances are dedicated to processing certain tasks to avoid duplicate processing of events. Arcus originally used Cassandra as a cluster coordinator, but this was replaced with Zookeeper in 2020 as part of making Arcus multi-DC once again. The current implementation allows Arcus to gracefully fail over to another availability region in the event of trouble.

To ensure that clients and hubs are always able to connect to running instances of Arcus Platform, I decided to use Route53's health-checked DNS. In this environment, each availability region has a DNS record associated with it, and traffic is normally split in a weighted fashion. In the event of an outage, the health check will fail and the record will be removed. Clients will then connect to whatever region is available until traffic is rebalanced. While a network load balancing approach would have been quicker to respond to outages, it was difficult to find one that would meet the price-sensitive requirements for this project. Additionally, simple HTTPS load balancing would not work, as hubs use mTLS to authenticate themselves to the platform.
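The behavior described above can be modeled in a few lines. This is a local simulation of weighted, health-checked record selection, not a call to any DNS or AWS API; the record layout, hostnames, and function names are hypothetical.

```python
import random


def healthy_records(records):
    """Drop records whose health check is failing, as the DNS service would."""
    return [r for r in records if r["healthy"]]


def resolve(records, rng):
    """Pick one healthy record with probability proportional to its weight."""
    candidates = healthy_records(records)
    if not candidates:
        raise RuntimeError("no healthy region available")
    weights = [r["weight"] for r in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]["target"]


records = [
    {"target": "region-a.example.com", "weight": 50, "healthy": True},
    {"target": "region-b.example.com", "weight": 50, "healthy": False},  # outage
]
# With region-b failing its check, every lookup lands on region-a.
answers = {resolve(records, random.Random(seed)) for seed in range(20)}
```

In practice the failover is only as fast as the health-check interval plus the record TTL, which is the latency trade-off against a network load balancer mentioned above.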

I eventually settled on a mix of Docker Compose and Kubernetes to deploy Arcus. Stateful services run in Docker on separate VM infrastructure and are replicated to three separate locations. Thanks to low inter-DC latency, I chose to deploy Kafka as a "stretch cluster", with a minimal set of in-sync replicas required. Initially, Kafka consumed a large amount of cross-DC network bandwidth (e.g. 25GB/day), but after upgrading to Kafka 2.4 I was able to enable follower fetching and compression, which significantly reduced network utilization.

In the future, better options are needed to support multi-DC deployments so that changes can be more easily rolled out and rolled back.

Arcus Apps and Web Site

The Arcus open-source project also included the original IRIS iOS and Android apps, with a few minor omissions, mainly for code that appears not to have been appropriately licensed (e.g. WWDC sample code). With appropriate attribution, these apps have been restored to their original condition and, while not in any app store, are still seeing use. Unfortunately, the Arcus iOS app is a Swift 3 project, meaning that Xcode 10.1, released in October 2018, is the latest version that can build and run it.

The Arcus Web App was originally developed by Bitovi on top of DoneJS as a WebSockets client. The app is fully responsive and follows component-based development, where each device, panel, or element is separated and available for development and testing. You can read more about the Arcus Web App in the case study that Bitovi put together.

In Conclusion

Nearly a year later, WLNet is running Arcus for a small number of people with relatively high availability. Given the size and complexity of Arcus Platform, maintaining it is non-trivial. In the future, there are plans to add a web IDE for rule management, multi-factor authentication, transaction intent verification, and support for MQTT to allow integration with other smart-home platforms (e.g. Home Assistant), all while improving overall platform reliability. While the future of Arcus is uncertain, the process of bringing IRIS back to life has been a valuable learning experience and shows what can be done if a company decides to open-source its software as part of leaving a business segment.

While Arcus is probably not suitable for an individual without a home lab, a motivated individual or organization wishing to bring it back could do so with a fraction of the investment required to start from scratch. Had an organization taken over as soon as Arcus was open-sourced, it likely could have provided a transition period for existing IRIS customers.

The Arcus source code is available on https://github.com/arcus-smart-home

This project has been very beneficial to me in understanding technologies like Kubernetes, Kafka, Cassandra, Zookeeper, Yocto Linux, and many others. The need to support this platform in a highly available fashion for a small set of users has also provided an opportunity to apply good operational practices and to observe and learn from failures.

Special Thanks To...

I'd like to give special thanks to Mike Silverman, Paul Couto, Erik Anderson, Chris LaPre, and Jamone Kelly of the IRIS team, who were all helpful in answering questions about Arcus and made many contributions of their own, whether at Lowe's or independently. I'd also like to thank thegillion, who put together many videos covering IRIS by Lowe's and was an active member of the Living With Iris Forums. Finally, I'd like to thank everyone who sent in various IRIS products for testing and validation with Arcus Platform.